It must be case study season at Google. My feeds and inbox are suddenly flooded with PDFs and carousels showcasing incredible results from massive brands. We see huge numbers from Nissan, L’Oreal, and others, followed by a guide on holiday “best practices.”

I recently sat down with former Googler Jyll Saskin Gales to break some of these down, and it confirmed a long-held suspicion: these case studies are rarely as straightforward as they seem. When I see a case study from a brand like Nissan, I don’t always know what to do with it. So, let’s deconstruct a few and figure out what the average advertiser can actually take away from them.

Before we dive in, let’s get one thing straight: we aren’t doubting the numbers themselves. Google isn’t faking data; these figures are run through multiple layers of verification, so I take them as accurate. The problem isn’t the numbers; it’s the story they’re telling and, more importantly, the parts they leave out.

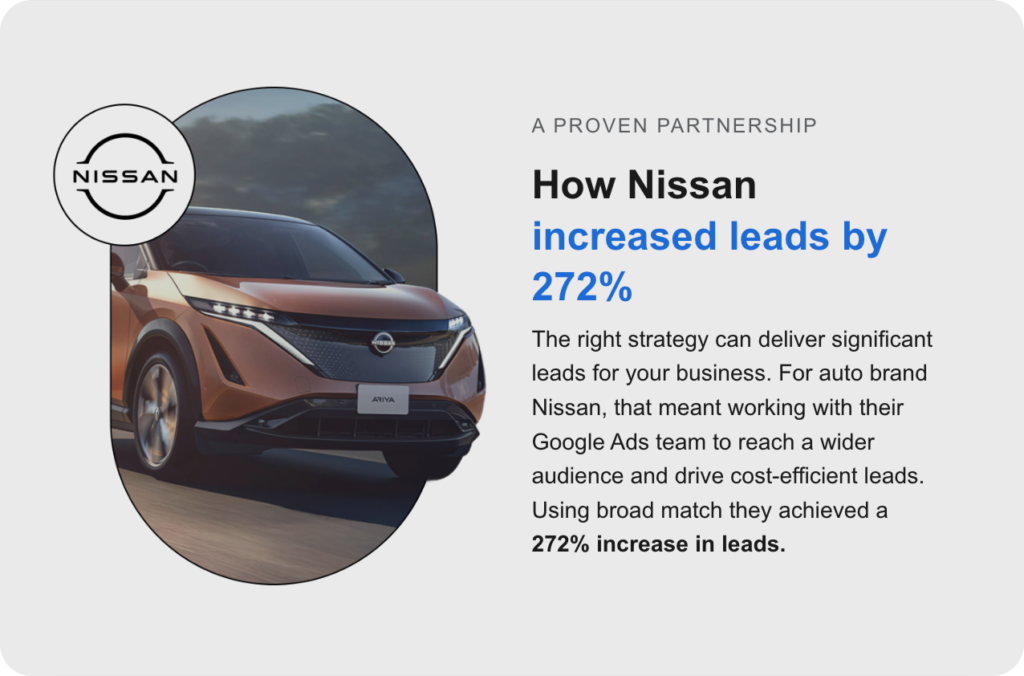

Deconstructing the Nissan Case: 272% More Leads with Broad Match?

First up is a real head-scratcher. Nissan apparently increased leads by 272% just by using broad match. My first reaction, and Jyll’s, was the same: there has to be more to this story. For an existing advertiser of Nissan’s scale to nearly quadruple its lead volume (a 272% increase is roughly 3.7x) from a single keyword change is practically unheard of.

The issue here is that the case study presents a single, massive number with zero context.

What’s the baseline? That’s the real question.

Google wants you to believe that flipping the switch on broad match was solely responsible for this lift. But that’s never the full picture. My first question is always: what are we comparing this to?

- What was the time period? A 272% increase week-over-week during a major promotion is very different from a sustained, year-over-year lift.

- Were they using Smart Bidding before? Moving from manual bidding to a Smart Bidding strategy combined with broad match could account for a significant jump. The bidding is a huge part of the equation.

- What other changes were made? Did they remove a massive list of negative keywords that was strangling the account? Did they suddenly start bidding on their own brand name where they hadn’t been before?

I have never seen a decently run account achieve anything close to these numbers by simply adding broad match. We test it all the time. A 10% lift would be interesting. 272% just tells me the “before” state was likely a mess.
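To make the baseline point concrete, here is a minimal sketch of the arithmetic. The lead counts are invented for illustration and are not Nissan’s data. It shows that the same "after" number can read as a modest lift or a 272% lift depending on where the account started.

```python
def lift_to_multiplier(pct_increase: float) -> float:
    """Convert a percentage increase into a before/after multiplier."""
    return 1 + pct_increase / 100


def pct_lift(before: float, after: float) -> float:
    """Percentage increase from `before` to `after`."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (after - before) / before * 100


# A 272% increase means "after" is 3.72x "before".
print(round(lift_to_multiplier(272), 2))  # 3.72

# Hypothetical account: 372 leads per month after the change.
after_leads = 372

# Same "after", two invented baselines.
healthy_baseline = 338    # well-run account before the change
throttled_baseline = 100  # e.g. strangled by negatives, no brand coverage

print(round(pct_lift(healthy_baseline, after_leads), 1))    # 10.1
print(round(pct_lift(throttled_baseline, after_leads), 1))  # 272.0
```

The feature and the end state are identical in both scenarios. Only the starting point differs, which is why the baseline is the first thing to ask about.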

Quality vs. Quantity: Not all “leads” are created equal

The second red flag is the definition of a “lead.” Did the definition change? For a company like Nissan, a “lead” could be a simple form fill, or it could be dealership-level store visit tracking. If they suddenly started counting store visits as a lead conversion (which you shouldn’t, as they aren’t sales), you could easily see numbers like this.

You too could get a 272% increase in “leads” by turning on the Display Network and Search Partners in your Search campaigns. It doesn’t mean you’ll see any increase in actual sales. Without knowing the impact on lead quality and sales, this number is just a vanity metric.
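A rough sketch of why lead count alone can mislead, using made-up numbers. The close rates and the "low-intent placements" source are assumptions for illustration, not figures from any case study.

```python
from dataclasses import dataclass


@dataclass
class LeadSource:
    name: str
    leads: int
    close_rate: float  # assumed share of leads that turn into sales

    @property
    def sales(self) -> float:
        return self.leads * self.close_rate


def totals(sources):
    """Return (total leads, total expected sales) across sources."""
    return sum(s.leads for s in sources), sum(s.sales for s in sources)


def pct_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# All numbers below are invented for illustration.
before = [LeadSource("search form fills", 100, 0.10)]
after = before + [LeadSource("low-intent placements", 272, 0.005)]

leads_before, sales_before = totals(before)
leads_after, sales_after = totals(after)

print(f"lead lift:  {pct_change(leads_before, leads_after):.1f}%")  # 272.0%
print(f"sales lift: {pct_change(sales_before, sales_after):.1f}%")  # 13.6%
```

In this toy example the headline lead number nearly quadruples while expected sales barely move, which is exactly the gap a single-metric case study hides.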

The takeaway: Be extremely skeptical of single-metric case studies. Should you test broad match? Maybe. But don’t expect these kinds of results unless your account has significant foundational problems to begin with.

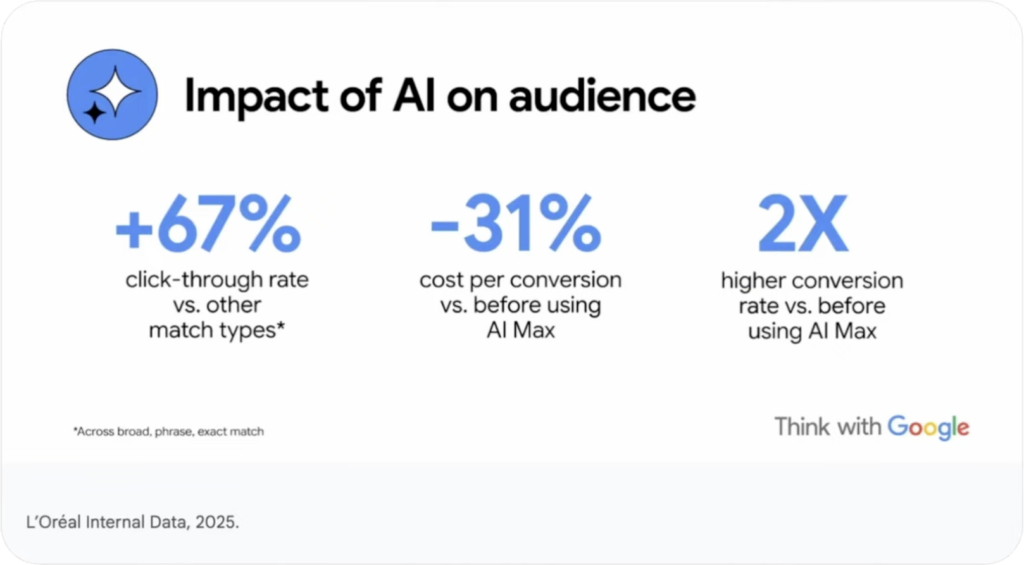

Analyzing the L’Oreal AIMax Study: Impressive Stats, Hidden Context

Next, we have L’Oreal, which added AIMax (Google’s AI-powered asset creation and targeting for Search) and saw some staggering results:

- 67% higher click-through rate

- 2x higher conversion rate

- 31% lower CPA

Unlike the Nissan example, Google gave us a lot more data here, which I appreciate. It’s a full appetizer, not just a tiny bone. But even with more data, the context is what matters.

Is AIMax the hero, or was the original setup flawed?

The case study mentions that AIMax helped L’Oreal capture new searches like “best cream for facial spots.” This immediately raises a question: if they were already running broad, phrase, and exact match, why weren’t they hitting a query like that already? It’s not some obscure, AI-generated search term; it’s a standard, high-intent query.

This suggests that the real value might not have been the keywordless targeting, but rather the text customization and final URL expansion. AIMax matched a specific ad and landing page to that query, which is something Dynamic Search Ads (DSAs) have been doing for years. It makes me wonder if AIMax is just a new wrapper for existing capabilities.

Personally, I think the ad copy component of AIMax has potential once they get it right. But in its current state, it feels rushed. The results we’ve seen are mostly cannibalization of keywords that are already targeted elsewhere in the account.

Volume is what matters, not just efficiency metrics

The headline stats are about efficiency (CTR, CVR, CPA). But what we all really want from new features is more profitable volume. Digging deeper into the study, we see the real impact number: a 27% lift in overall conversion value for the campaign. A 27% lift is good. It’s a solid win. But it’s a far cry from the “2x higher conversion rate” in the headlines.

This tells me that while some product categories saw huge gains (glycolic products doubled conversions), the overall impact was more modest. It also implies that for this to be a win, L’Oreal had to be a massive, sophisticated advertiser that was already maxed out on its existing Search, Shopping, and PMax opportunities. This case study doesn’t apply to 99% of advertisers.
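Here is a small sketch of how one category can double while the campaign as a whole lifts far less. The category names and values are hypothetical. I picked them only so the blended result lands on 27%, and they are not taken from the L’Oreal study.

```python
def pct_lift(old: float, new: float) -> float:
    """Percentage increase from `old` to `new`."""
    return (new - old) / old * 100


# Hypothetical conversion value per product category (any currency unit).
before = {"category_a": 100.0, "category_b": 400.0, "category_c": 500.0}
after = {"category_a": 200.0, "category_b": 450.0, "category_c": 620.0}

per_category = {
    name: round(pct_lift(before[name], after[name]), 1) for name in before
}
overall = round(pct_lift(sum(before.values()), sum(after.values())), 1)

print(per_category)  # {'category_a': 100.0, 'category_b': 12.5, 'category_c': 24.0}
print(overall)       # 27.0
```

The category that doubled is also the smallest one, so it makes a great headline but moves the total only a little. The blended number is the one to compare against your own account.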

The takeaway: Even detailed case studies are designed to sell a product. Look past the efficiency metrics and find the real business impact number (like conversion value). Then, ask yourself if your business shares the same context as a multi-million dollar advertiser like L’Oreal.

Google’s “Best Practices”: Separating Advice from Sales Pitch

Finally, let’s look at Google’s Holiday Guide. It’s full of general advice, and just because it comes from Google doesn’t automatically make it bad. But you have to put your critical thinking cap on.

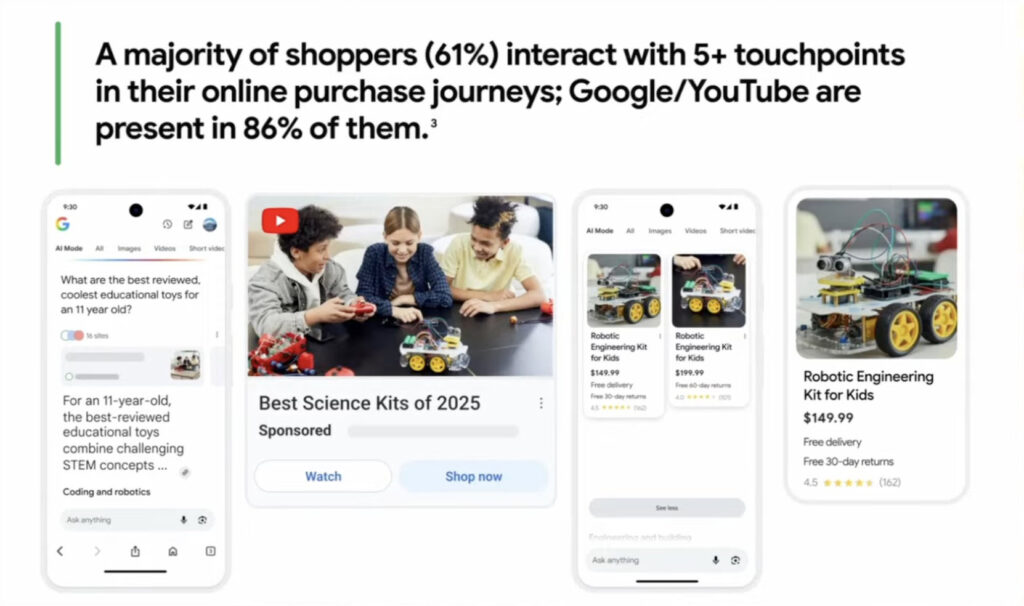

The YouTube Push: A valid point buried in jargon

Google is pushing the Search + YouTube combination hard, and for good reason. YouTube is probably the most underutilized advertising platform today, and it plays a huge role in the purchase journey. The advice to “engage users earlier in the season” is valid, especially for a channel like YouTube.

Of course, the advice to “start advertising earlier” and “keep your ads on later” conveniently benefits Google’s revenue. But the underlying principle is sound. You need a longer time horizon for upper-funnel activities to pay off.

Retention Goals: A good idea that’s hard to execute in Search

The guide also talks about using goals for high-value customers and retention. I struggle with how to apply this effectively in Search. The concept makes sense for a subscription business where you might run a competitor campaign targeted only to your customer match list, trying to prevent churn.

But for most e-commerce businesses, it’s a solution in search of a problem. Jyll brought up a great niche example of a client selling used children’s clothing. Running Shopping Ads to a broad audience was unprofitable because people wanted new clothes. But by using RLSA (remarketing lists for search ads) and targeting only previous website visitors, the ads became profitable because that audience already knew the store sold used items.

It’s a clever application but highlights how specific the circumstances need to be for this to work. It’s not a broad, evergreen strategy for most advertisers.

So, What Should You Actually Do?

After all this, you might be wondering what to trust. To be honest, I don’t get much value from these polished, big-brand case studies. They lack the context needed to be genuinely helpful.

If you’re looking for resources from Google, my favorite is the most boring one: the Google Ads Help Center. Reading the official technical documentation about how AIMax or Smart Bidding actually works will be immensely more helpful than reading a marketing case study about it. If the documentation is too dense, that’s where practitioners like us come in, breaking it down into something digestible and useful.

The goal isn’t to dismiss everything Google says, but to approach it with healthy skepticism. Understand the context, question the baseline, and always look for the number that reflects real business impact, not just a flashy marketing stat.

[TL;DR]

- Question the Baseline: A massive performance lift (like Nissan’s 272%) often says more about a flawed starting point than the effectiveness of a single new feature.

- Context is Everything: Results from a massive advertiser like L’Oreal, who may have already maxed out other channels, are not applicable to the average business.

- Look for Volume, Not Just Efficiency: High CTRs and low CPAs are nice, but the most important metric is the actual lift in profitable business outcomes like conversion value or sales.

- Separate Advice from Sales: Google’s “best practices” often align with its business goal (more ad spend). While the underlying logic can be sound (e.g., YouTube needs time), always analyze it through the lens of your own business needs.

- Read the Manual, Not the Brochure: The most valuable information is in the technical Google Ads Help Center documentation, not the polished marketing case studies.